Volume 12 Story 7 - 10/3/2011

You wake up in the morning, get dressed, grab your e-reader and a freshly brewed coffee, wrangle the kids into the car, tell the navigation system your destinations (“School and then the Office”), and take off. During the commute, you read a chapter of your kids’ favorite book together, send them off to class, watch the latest news from around the world, and buy a Valentine's Day gift online for your spouse. All of this happens within about an hour! In this scenario, the car is driving itself. Engineers at Velodyne in Morgan Hill, California, have patented an advanced LIDAR technology that uses multiple spinning lasers to help automated vehicles see their surroundings faster than ever before. Their mobile mapping systems are advancing the vision, both literally and figuratively, for the future of driving.

For a vehicle to drive itself, it has to know a lot about its surroundings. Prototype driverless cars today use a technology called LIDAR, or LIght Detection And Ranging, to create local maps around the car in real time. LIDAR is based on the same concept as RADAR, but it uses laser light instead of radio waves. The car sends out a pulse of light in a certain direction, and an on-board sensor records the reflected pulse's time-of-flight. Because the pulse travels at the speed of light, half of the round-trip time multiplied by that speed gives the distance to the reflecting object. By sending out laser beams in all directions, collecting the reflected energy, and performing some nifty high-speed computer processing, the vehicle can create a real-time, virtual map of the obstacles in its path. Because laser light has a much shorter wavelength (and correspondingly higher photon energy) than radio waves, it reflects better from non-metallic objects and provides mapping advantages over RADAR. By coupling novel roof-mounted LIDAR systems with vision cameras, advanced computer processing, and GPS to position the vehicle in global coordinates, it has become possible to create a self-driving machine.
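As a rough illustration of the time-of-flight idea described above, here is a minimal Python sketch. The function names, and the assumption that each laser return arrives tagged with an azimuth and an elevation angle, are illustrative only and are not taken from Velodyne's software.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the target in meters.

    The pulse travels to the object and back, so the one-way
    distance is half of the total path length.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def point_from_return(round_trip_s: float,
                      azimuth_deg: float,
                      elevation_deg: float) -> tuple[float, float, float]:
    """Convert one laser return into an (x, y, z) point around the sensor.

    Assumed convention (hypothetical): azimuth is measured in the
    horizontal plane from the x axis, elevation upward from that plane.
    """
    r = range_from_time_of_flight(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az),
            horizontal * math.sin(az),
            r * math.sin(el))


if __name__ == "__main__":
    # A pulse that returns after 200 nanoseconds hit something about 30 m away.
    print(range_from_time_of_flight(200e-9))
    print(point_from_return(200e-9, azimuth_deg=90.0, elevation_deg=0.0))
```

Repeating this conversion for every return, as the beams sweep around the vehicle, is what builds up the cloud of points that forms the virtual map. A real system would also have to correct for the motion of the car and the calibration of each laser, which this sketch leaves out.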

There is some dispute over who first proposed the autonomous vehicle concept, though the earliest working prototypes came from teams in Japan and Germany. In 1977, the Tsukuba mechanical engineering lab in Japan built a prototype that drove a special course at up to 30 km/h. In 1980, Mercedes-Benz unveiled a vision-guided van that achieved speeds of up to 100 km/h on streets with no traffic. Also in the 1980s, the US DARPA-funded Autonomous Land Vehicle was the first vehicle to carry a LIDAR system onboard. DARPA later created the Grand and Urban Challenges to spur autonomous vehicle development, and the top two finishers from Carnegie Mellon University and Stanford University attributed their success in the competition to advanced LIDAR technology from a company called Velodyne.

When typical LIDAR systems were using a single spinning laser beam to generate up to 200,000 map points per second, Velodyne founder David Hall designed a system called the HDL-64E, which used 64 spinning lasers and accumulated 1.3 million points per second. In the context of imaging, this more than sixfold increase in data is analogous to the difference between first-generation cell phone cameras and today's low-end camera phones. "The HDL-64E provided the autonomous vehicles’ perception and navigation systems with sufficient information about their environment to render all other ranging sensors unnecessary," says Brent Schwarz of Velodyne.
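The data-rate comparison above is simple arithmetic. A quick check in Python, using only the figures quoted in the text (the variable names are illustrative):

```python
# Figures quoted in the article.
single_beam_points_per_s = 200_000   # "up to" this many, so an upper bound
hdl64e_points_per_s = 1_300_000
hdl64e_laser_count = 64

# Ratio of data rates; since 200,000 is an upper bound for the
# single-beam systems, the real improvement is at least this large.
rate_ratio = hdl64e_points_per_s / single_beam_points_per_s

# Average number of points each of the 64 lasers contributes per second.
points_per_laser_per_s = hdl64e_points_per_s / hdl64e_laser_count

print(f"data-rate ratio: {rate_ratio}")
print(f"points per laser per second: {points_per_laser_per_s}")
```

The ratio comes out at 6.5, consistent with the sixfold figure, and each laser contributes on average about 20,000 points per second.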

Despite the key advances in LIDAR technology, self-driving cars still face many challenges. For autonomous vehicles to succeed in a public environment like New York City, they need to be able to interpret the sometimes irrational behavior of human beings. Jaywalking pedestrians, cyclists weaving through traffic, small potholes, and ice on the road surface all challenge the perception and intelligence of the vehicle. Also, in the event of an accident, who is responsible? The owner of the vehicle? The designer of the vehicle's sensors? The software designer? "Given the cost and legal liability challenges of fielding unmanned vehicles, I don’t anticipate seeing true self-driving cars on public streets in my lifetime," says Schwarz. There is more immediate potential for autonomous vehicles in performing dirty or dangerous jobs in controlled environments. Just as IT professionals can control multiple computer servers and workstations remotely, autonomous vehicle professionals will soon be able to operate heavy machinery, transport cargo, or harvest crops from the comfort of a desk. "It will be a gradual evolution," envisions Schwarz, "starting with tele-operated machines, subsequently moving towards supervised autonomy (one human operator monitoring multiple autonomous machines), before full autonomy is achieved."

LIDAR in the Driver's Seat

New devices based on a concept similar to that of RADAR could revolutionize your daily commute. Light detection and ranging (LIDAR) technologies are providing the vision for a new generation of driverless vehicles.

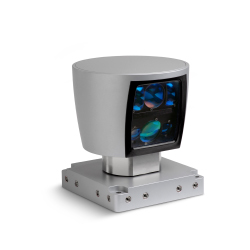

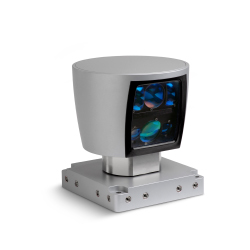

The sensor. At the core of Velodyne's LIDAR system is a sensor called the HDL-64E, which uses 64 spinning lasers and accumulates 1.3 million points per second to reconstruct a virtual map of its surroundings. Picture courtesy: Velodyne Inc.

A driverless car. The Spirit of Berlin is an autonomous car based on the HDL-64E sensor, designed by the Artificial Intelligence Group at the Freie Universität Berlin in 2007. Picture courtesy: AutoNOMOS Project.

Stefano Young

2011 © Optics & Photonics Focus

SY is currently a graduate student at the University of Arizona’s College of Optical Sciences.

Brent Schwarz, LIDAR: Mapping the world in 3D, Nature Photonics (2010) 4, 429-430.